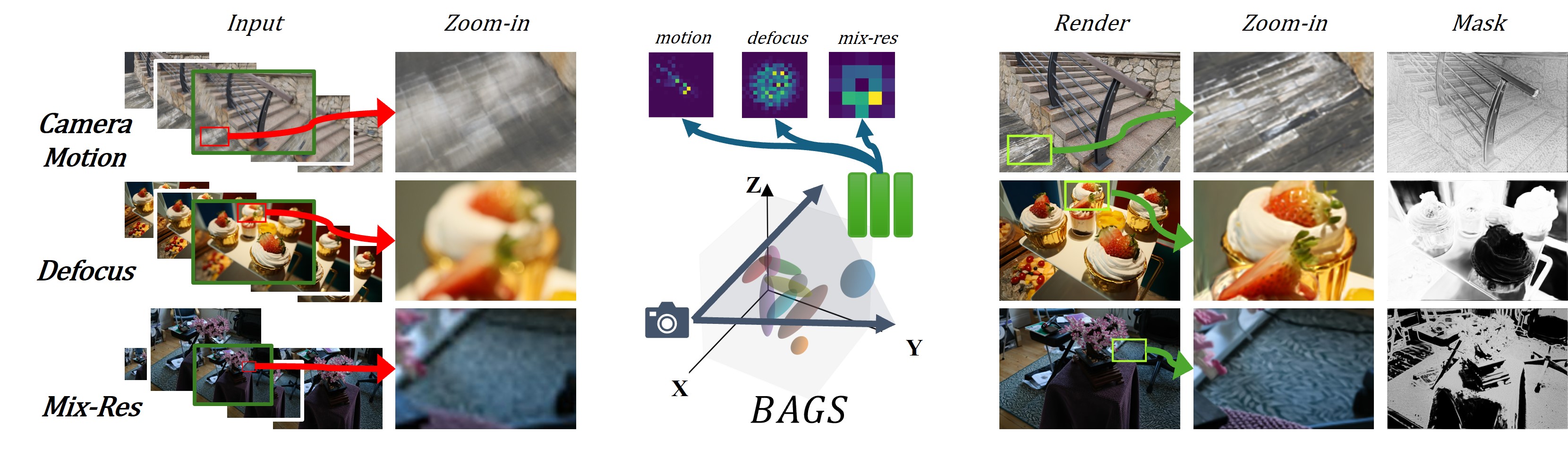

We introduce Blur Agnostic Gaussian Splatting, or BAGS, a Splatting-based method that is robust against various types of blur.

Recent efforts in using 3D Gaussians for scene reconstruction and novel view synthesis achieve impressive results on curated benchmarks; however, images captured in real life are often blurry. In this work, we analyze the robustness of Gaussian-Splatting-based methods against various types of image blur, such as motion blur, defocus blur, and downscaling blur. Under these degradations, Gaussian-Splatting-based methods tend to overfit and produce worse results than Neural-Radiance-Field-based methods. To address this issue, we propose Blur Agnostic Gaussian Splatting (BAGS). BAGS introduces additional 2D modeling capacity such that a 3D-consistent, high-quality scene can be reconstructed despite image-wise blur. Specifically, we model blur by estimating per-pixel convolution kernels with a Blur Proposal Network (BPN). BPN is designed to consider spatial, color, and depth variations of the scene to maximize modeling capacity. BPN also proposes a quality-assessing mask, which indicates the regions where blur occurs. Finally, we introduce a coarse-to-fine kernel optimization scheme; this scheme is fast and avoids sub-optimal solutions caused by a sparse point cloud initialization, which often occurs when Structure-from-Motion is applied to blurry images. We demonstrate that BAGS achieves photorealistic renderings under various challenging blur conditions and imaging geometries, while significantly improving upon existing approaches.

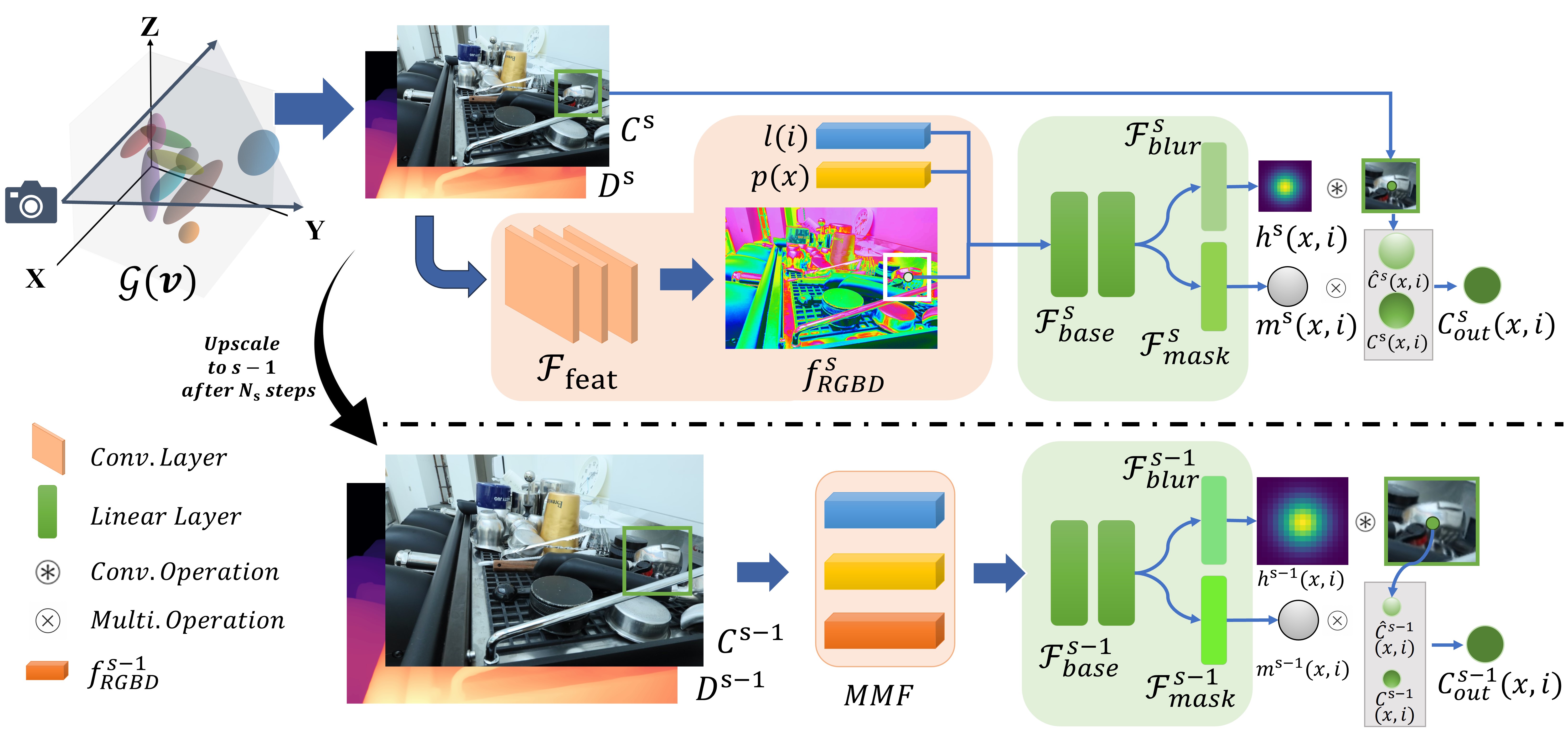

BAGS is optimized by training a Blur Proposal Network (BPN) on top of the scene over multiple scales. In BPN, we first extract features from color and depth, which are concatenated with the position and view embeddings to form the Multi-Modal Feature (MMF). Then, the kernel MLP estimates a per-pixel kernel and mask. We use the kernel to model the blurred image and employ the mask to blend the rendered image with the blur-modeled image, yielding the final output image. In the coarse-to-fine optimization scheme, we upscale the image resolution after a certain number of steps and modify the kernel MLP to produce a kernel with a larger size.
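The kernel-and-mask step described above can be sketched in NumPy. This is a minimal illustration, not the BAGS implementation: the function names `apply_per_pixel_kernels` and `blend_with_mask`, the array layouts, the edge padding, and the convention that a mask value of 1 selects the blur-modeled pixel are all assumptions made for the example. Kernels are assumed to have an odd size and to sum to 1.

```python
import numpy as np


def apply_per_pixel_kernels(image, kernels):
    """Blur an (H, W, C) image with a separate K x K kernel at every pixel.

    kernels has shape (H, W, K, K); K is assumed odd and each kernel is
    assumed to sum to 1 (hypothetical conventions for this sketch).
    """
    H, W, _ = image.shape
    K = kernels.shape[-1]
    pad = K // 2
    # Replicate border pixels so every kernel has a full neighbourhood.
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    out = np.zeros(image.shape, dtype=np.float64)
    for dy in range(K):
        for dx in range(K):
            # Shifted copy of the image, weighted by that kernel tap per pixel.
            out += kernels[:, :, dy, dx, None] * padded[dy:dy + H, dx:dx + W, :]
    return out


def blend_with_mask(rendered, blurred, mask):
    """Blend with an (H, W, 1) mask in [0, 1]; 1 keeps the blur-modeled pixel
    (assumed convention)."""
    return mask * blurred + (1.0 - mask) * rendered


# Usage: a random sharp rendering, random normalized kernels, a random mask.
rng = np.random.default_rng(0)
rendered = rng.random((8, 8, 3))
kernels = rng.random((8, 8, 5, 5))
kernels /= kernels.sum(axis=(2, 3), keepdims=True)
mask = rng.random((8, 8, 1))
output = blend_with_mask(rendered, apply_per_pixel_kernels(rendered, kernels), mask)
```

In training, `output` would be compared against the blurry input image, so that the underlying rendering is free to stay sharp.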

We show additional results of BAGS on various datasets. In this section, we provide teaser comparisons between BAGS and Mip-Splatting.

While BAGS is a generalist approach that addresses multiple types of blur, estimating per-pixel convolution kernels at high resolution can become very expensive. To this end, we have explored applying pixel shuffling before estimating the blur kernel. This assumes that the blur kernel stays roughly constant within a small region. With this approach, BAGS scales to 2K resolution on the Deblur-NeRF dataset with strong visual results. Here, we provide visual comparisons between the results of BAGS at 0.6K and 2K resolution.
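One way to read the pixel-shuffling idea is sketched below, under assumptions: the image is rearranged so that each `r x r` block becomes a single location with `r * r * C` channels, one kernel is estimated per block, and that kernel is then shared by all pixels in the block. The helper names `pixel_unshuffle` and `share_kernels` and the channel ordering are hypothetical; the text above does not specify how BAGS arranges this step.

```python
import numpy as np


def pixel_unshuffle(image, r):
    """Rearrange (H, W, C) into (H/r, W/r, r*r*C): each r x r block becomes
    one spatial location (space-to-depth)."""
    H, W, C = image.shape
    assert H % r == 0 and W % r == 0, "H and W must be divisible by r"
    x = image.reshape(H // r, r, W // r, r, C)
    x = x.transpose(0, 2, 1, 3, 4)  # (H/r, W/r, r, r, C)
    return x.reshape(H // r, W // r, r * r * C)


def share_kernels(kernels_lr, r):
    """Expand (h, w, K, K) block-level kernels to (h*r, w*r, K, K) so that
    every pixel in an r x r block uses the same kernel."""
    return np.repeat(np.repeat(kernels_lr, r, axis=0), r, axis=1)


# Usage: kernels are estimated on a grid r*r times smaller than the image.
image = np.random.default_rng(0).random((8, 8, 3))
features = pixel_unshuffle(image, r=2)         # shape (4, 4, 12)
kernels_lr = np.full((4, 4, 3, 3), 1.0 / 9.0)  # stand-in for network output
kernels_full = share_kernels(kernels_lr, r=2)  # shape (8, 8, 3, 3)
```

With a factor `r`, the number of kernels to estimate drops by `r * r`, which is where the savings at 2K resolution would come from in this reading.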

BAGS models image degradation through convolution kernels and masks. Because we constrain its sparsity, the mask meaningfully highlights the blurry regions in a given training image. By visualizing the kernels modeled at different pixels, we can also easily characterize the type of blur observed. Specifically, the estimated kernels for camera motion blur exhibit clear patterns of camera movement, while those for defocus blur show Gaussian-like distributions that depend on the pixel's distance from the focus plane. These emergent properties of BAGS provide ways to automatically and precisely evaluate the quality of training images. Here, we provide additional visualizations of the kernels and masks estimated by BAGS.
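As a rough illustration of how such kernels and masks could be inspected numerically, the sketch below computes an L1-style sparsity penalty on a mask and an anisotropy score for a kernel. Both are assumptions made for this example: the exact sparsity constraint used by BAGS is not given here, and the anisotropy score (the ratio of the eigenvalues of the kernel's second-moment matrix) is a hypothetical heuristic, not part of the method. An elongated, motion-like kernel scores high, while a Gaussian-like kernel scores close to 1.

```python
import numpy as np


def mask_sparsity_penalty(mask):
    """Mean absolute mask value: an L1-style sparsity penalty (assumed form)."""
    return float(np.mean(np.abs(mask)))


def kernel_anisotropy(kernel):
    """Ratio of the largest to smallest eigenvalue of the kernel's spatial
    covariance. Assumes a non-negative K x K kernel with positive sum."""
    K = kernel.shape[0]
    w = kernel / kernel.sum()
    ys, xs = np.mgrid[0:K, 0:K].astype(np.float64)
    # Centre of mass of the kernel weights.
    my, mx = (w * ys).sum(), (w * xs).sum()
    dy, dx = ys - my, xs - mx
    cov = np.array([
        [(w * dy * dy).sum(), (w * dy * dx).sum()],
        [(w * dy * dx).sum(), (w * dx * dx).sum()],
    ])
    evals = np.linalg.eigvalsh(cov)  # eigenvalues in ascending order
    return float(evals[1] / (evals[0] + 1e-8))


# Usage: a horizontal streak (motion-like) versus an isotropic Gaussian.
streak = np.zeros((5, 5))
streak[2, :] = 1.0 / 5.0
yy, xx = np.mgrid[-2:3, -2:3]
gaussian = np.exp(-(yy ** 2 + xx ** 2) / 2.0)
gaussian /= gaussian.sum()
print(kernel_anisotropy(streak) > kernel_anisotropy(gaussian))
```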

@article{peng2024bags,
  title={BAGS: Blur Agnostic Gaussian Splatting through Multi-Scale Kernel Modeling},
  author={Peng, Cheng and Tang, Yutao and Zhou, Yifan and Wang, Nengyu and Liu, Xijun and Li, Deming and Chellappa, Rama},
  journal={arXiv preprint arXiv:2403.04926},
  year={2024}
}